0. Introduction

- Neural Architecture Search: a technique for automating the design of artificial neural networks.

1. NAS: Neural Architecture Search (Google, 2017)

Introduction: Neural networks are still hard to design by hand. This paper presents Neural Architecture Search, a gradient-based method for finding good architectures (the gradient is a policy gradient used to train the controller).

Methods:

-

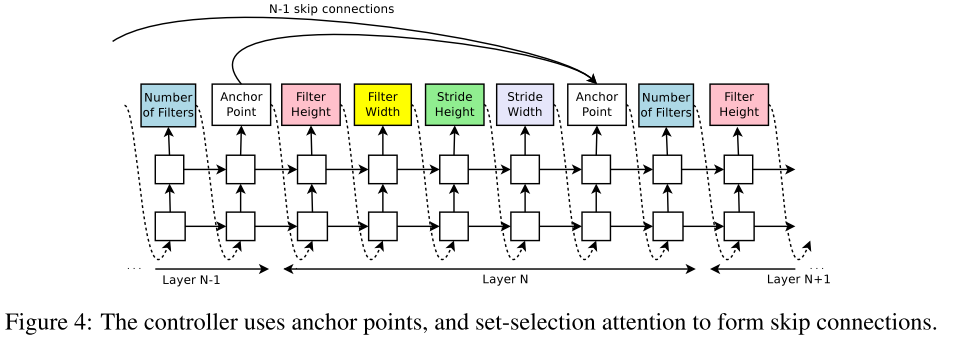

use RNN controller which returns HyperParams of Conv in the order of (FH > FW > SH > SW > NF , Fig4) to generate neural networks. After the RNN builds the desired number of layers, we stop the RNN and train the network from scratch to convergence.
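The sketch below illustrates only the controller's output format: one token per hyperparameter, emitted in the order FH > FW > SH > SW > NF and repeated for each layer. It makes two assumptions. First, the RNN is replaced by a uniform random policy, whereas the real controller conditions every choice on its hidden state. Second, the value lists in `CHOICES` are hypothetical placeholders.

```python
import random

# Hypothetical value sets for each hyperparameter; the paper's exact lists may differ.
CHOICES = {
    "FH": [1, 3, 5, 7],      # filter height
    "FW": [1, 3, 5, 7],      # filter width
    "SH": [1, 2, 3],         # stride height
    "SW": [1, 2, 3],         # stride width
    "NF": [24, 36, 48, 64],  # number of filters
}
ORDER = ["FH", "FW", "SH", "SW", "NF"]  # prediction order within one layer


def sample_architecture(num_layers, rng):
    """Emit one token per hyperparameter, layer by layer, and stop after num_layers."""
    layers = []
    for _ in range(num_layers):
        # A real controller would condition each choice on its RNN hidden state;
        # this sketch samples each token uniformly (simplifying assumption).
        layer = {name: rng.choice(CHOICES[name]) for name in ORDER}
        layers.append(layer)
    return layers


arch = sample_architecture(num_layers=3, rng=random.Random(0))
for i, layer in enumerate(arch):
    print(i, layer)
```

Each sampled `arch` would then be built and trained from scratch. That training step is what makes NAS so expensive.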

The controller is trained with reinforcement learning (REINFORCE) to maximize the expected accuracy of the generated architectures on a validation set. Each child network's validation accuracy serves as the reward.
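The REINFORCE update can be sketched without a deep-learning library under two simplifying assumptions. First, the RNN controller is replaced by independent softmax distributions, one per decision. Second, "train the child network and measure validation accuracy" is replaced by a hypothetical `toy_reward` function. The baseline is an exponential moving average of past rewards, a common variance-reduction trick. The values of `lr`, `decay`, and `steps` are arbitrary.

```python
import math
import random


def softmax(logits):
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def toy_reward(actions, target):
    """Hypothetical stand-in for validation accuracy: fraction of decisions equal to target."""
    return sum(a == t for a, t in zip(actions, target)) / len(target)


def reinforce(num_options, target, steps=2000, lr=0.1, decay=0.95, seed=0):
    rng = random.Random(seed)
    logits = [[0.0] * n for n in num_options]  # one categorical per decision
    baseline = 0.0
    for _ in range(steps):
        probs = [softmax(row) for row in logits]
        # Sample one architecture: one action per decision.
        actions = [rng.choices(range(len(p)), weights=p)[0] for p in probs]
        reward = toy_reward(actions, target)
        advantage = reward - baseline
        # REINFORCE: d log p(a) / d logit_k = 1[k == a] - p_k for a softmax.
        for row, p, a in zip(logits, probs, actions):
            for k in range(len(row)):
                row[k] += lr * advantage * ((1.0 if k == a else 0.0) - p[k])
        # Exponential moving average of past rewards serves as the baseline.
        baseline = decay * baseline + (1 - decay) * reward
    return [softmax(row) for row in logits]


TARGET = [2, 1, 0, 0, 3]
final_probs = reinforce(num_options=[4, 4, 3, 3, 4], target=TARGET)
for p, t in zip(final_probs, TARGET):
    print(f"P(target choice) = {p[t]:.2f}")
```

In the real method each reward costs a full child-network training run, so the controller sees far fewer samples than this toy loop.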

In addition, skip connections are formed with set-selection attention: at each layer, the controller decides which earlier layers feed into it.
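As we understand the paper, layer i gets one content-based sigmoid per earlier layer j, with P(j → i) = sigmoid(vᵀ tanh(W_prev·h_j + W_curr·h_i)). Here h_j and h_i are the controller's hidden states at the anchor points of layers j and i. The minimal sketch below assumes random, untrained weights and random stand-in hidden states, so the probabilities themselves are meaningless. Only the computation is illustrated.

```python
import math
import random

HIDDEN = 4  # hypothetical controller hidden size


def matvec(m, x):
    """Multiply matrix m (list of rows) by vector x."""
    return [sum(a * b for a, b in zip(row, x)) for row in m]


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def skip_probs(anchors, i, w_prev, w_curr, v):
    """P(layer j is an input to layer i) for every earlier layer j < i."""
    curr = matvec(w_curr, anchors[i])
    probs = []
    for j in range(i):
        prev = matvec(w_prev, anchors[j])
        hidden = [math.tanh(p + c) for p, c in zip(prev, curr)]
        probs.append(sigmoid(sum(a * b for a, b in zip(v, hidden))))
    return probs


def rand_matrix(rng, n):
    return [[rng.uniform(-1, 1) for _ in range(n)] for _ in range(n)]


rng = random.Random(0)
w_prev, w_curr = rand_matrix(rng, HIDDEN), rand_matrix(rng, HIDDEN)  # untrained, hypothetical
v = [rng.uniform(-1, 1) for _ in range(HIDDEN)]
# Random stand-ins for the controller's hidden states at each layer's anchor point.
anchors = [[rng.uniform(-1, 1) for _ in range(HIDDEN)] for _ in range(5)]

probs = skip_probs(anchors, i=4, w_prev=w_prev, w_curr=w_curr, v=v)
# Each earlier layer is connected independently with its own probability.
inputs = [j for j, p in enumerate(probs) if rng.random() < p]
print("P(j -> 4):", [round(p, 2) for p in probs], "sampled inputs:", inputs)
```

Because each edge is an independent Bernoulli choice, a layer can receive zero, one, or several skip connections.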

Results: NAS designed several promising architectures that perform as well as DenseNet on CIFAR-10, after 12,800 architectures had been generated and trained. The search on CIFAR-10 took 28 days on 800 GPUs (Nvidia K40).

2. NASNet (Google, 2017)

Introduction: Applying NAS directly to a large dataset is computationally expensive. The authors therefore propose a faster approach: search for a good architecture on a small dataset such as CIFAR-10, then transfer it to a larger dataset such as ImageNet.

Methods:

The main search method is the NAS framework (an RNN controller trained with reinforcement learning).

The overall architecture of the CNN is predetermined by hand; only the structure of its repeated cells is searched.

-

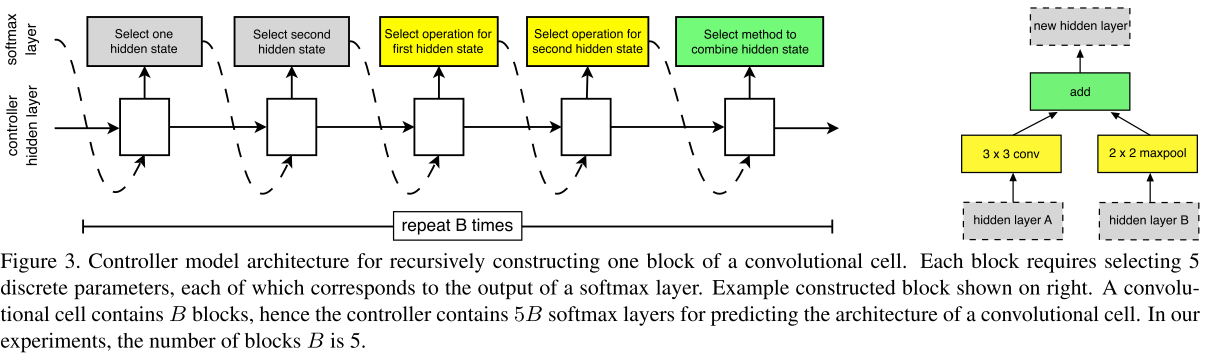

To easily build scalable architectures for images of any size, we need to search two types of cells : (1) Normal cell that returns a feature map of the same size, (2) Reduction cell that returns a feature map is reduced by a factor of two.
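The effect of the two cell types on feature-map shape can be traced with a short sketch. It assumes the CIFAR-10 layout of N Normal Cells, a Reduction Cell, N Normal Cells, a Reduction Cell, and N Normal Cells. It also assumes "same" padding, so a stride-2 reduction gives ceil(size / 2), and the heuristic of doubling the filter count whenever the spatial size is halved. The starting filter count of 32 is a hypothetical value.

```python
def cell_output_shape(shape, cell):
    """Return (height, width, filters) after one cell (shape bookkeeping only)."""
    h, w, c = shape
    if cell == "normal":
        return (h, w, c)  # same spatial size
    if cell == "reduction":
        # Halve height and width (ceil division) and double the filter count.
        return (-(-h // 2), -(-w // 2), 2 * c)
    raise ValueError(f"unknown cell type: {cell}")


def cifar_stack(n):
    """Normal x N, Reduction, Normal x N, Reduction, Normal x N."""
    return (["normal"] * n + ["reduction"]) * 2 + ["normal"] * n


shape = (32, 32, 32)  # CIFAR-10 image size with a hypothetical 32 starting filters
for cell in cifar_stack(n=2):
    shape = cell_output_shape(shape, cell)
    print(f"{cell:>9}: {shape}")
```

Because the cells only preserve or halve the spatial size, the same two searched cells can be stacked for any input resolution by changing N or the number of reduction steps.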

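Each cell is assembled from a search space of 13 predefined operations (e.g., identity, pooling, and separable convolutions), rather than from the per-layer hyperparameters (filter sizes, strides, number of filters) that NAS predicts.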
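The sketch below shows how a cell can be sampled from the 13 operations. Each of the B blocks makes five choices: two input hidden states, one operation per input, and a method (element-wise addition or concatenation) for combining the two results. B = 5 follows the paper as we recall it. The random policy stands in for the trained controller, and the operation names are paraphrased labels rather than an API.

```python
import random

# The 13 operations in the NASNet search space (paraphrased names).
OPS = [
    "identity", "1x3 then 3x1 conv", "1x7 then 7x1 conv", "3x3 dilated conv",
    "3x3 avg pool", "3x3 max pool", "5x5 max pool", "7x7 max pool",
    "1x1 conv", "3x3 conv", "3x3 sep conv", "5x5 sep conv", "7x7 sep conv",
]
COMBINE = ["add", "concat"]


def sample_cell(num_blocks, rng):
    """Sample one cell: each block picks two inputs, one op per input, and a combine method."""
    hidden_states = ["h_i", "h_i-1"]  # outputs of the two previous cells
    blocks = []
    for b in range(num_blocks):
        in1, in2 = rng.choice(hidden_states), rng.choice(hidden_states)  # steps 1-2
        op1, op2 = rng.choice(OPS), rng.choice(OPS)                      # steps 3-4
        comb = rng.choice(COMBINE)                                        # step 5
        blocks.append((in1, in2, op1, op2, comb))
        hidden_states.append(f"block_{b}")  # the new state is usable by later blocks
    return blocks


cell = sample_cell(num_blocks=5, rng=random.Random(0))
for block in cell:
    print(block)
```

A Normal Cell and a Reduction Cell are sampled this way with separate controller predictions. The discrete, cell-sized search space is what makes the result transferable across datasets.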

Results: The resulting architectures approach or exceed state-of-the-art performance on both CIFAR-10 and ImageNet. The search took 4 days on 500 GPUs (Nvidia P100).

3. ENAS: Efficient NAS via Parameter Sharing (2018)

Introduction: The computational bottleneck of NAS and NASNet is training each child model from scratch to convergence. In this paper, the authors propose a fast and inexpensive approach to automatic model design.

Methods:

-

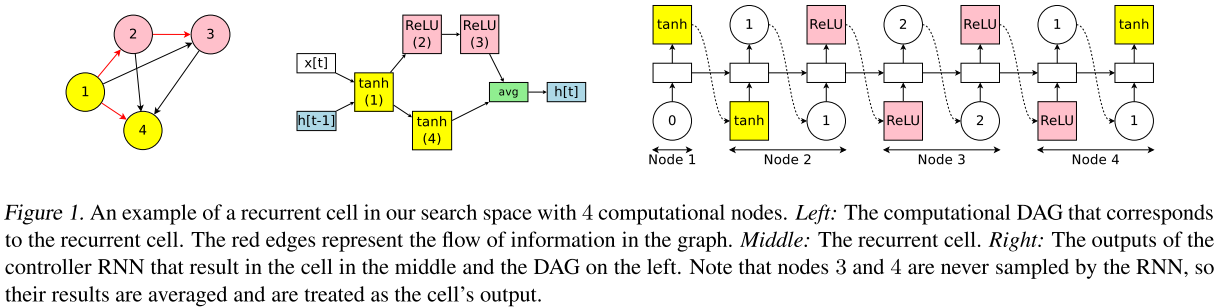

Authors proposed an easier way to represent NAS’s model-building process using DAG (Directed Acyclic Graph). Here, red arrows define a model in the search space.
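Sampling one child model from the DAG can be sketched as follows. The sketch loosely follows ENAS's recurrent-cell search. Each node after the first picks one earlier node as its input, which plays the role of a red arrow, and each node picks an activation. Nodes that no other node uses ("loose ends") are averaged to form the output. The activation list and node count are illustrative assumptions, and a random policy replaces the ENAS controller.

```python
import random

ACTIVATIONS = ["tanh", "relu", "identity", "sigmoid"]


def sample_child(num_nodes, rng):
    """Pick one incoming edge and one activation per node; the chosen edges form a child model."""
    edges = []
    activations = [rng.choice(ACTIVATIONS)]  # node 0 reads the cell inputs directly
    for node in range(1, num_nodes):
        # Choosing only earlier nodes keeps the subgraph acyclic.
        edges.append((rng.randrange(node), node))
        activations.append(rng.choice(ACTIVATIONS))
    used = {src for src, _ in edges}
    # Nodes not used as input by any other node are averaged to form the output.
    loose_ends = [n for n in range(num_nodes) if n not in used]
    return edges, activations, loose_ends


edges, activations, loose_ends = sample_child(num_nodes=4, rng=random.Random(0))
print("edges:", edges)
print("activations:", activations)
print("output = mean of nodes", loose_ends)
```

The full DAG contains every edge j → i with j < i, so every sampled child is a subgraph of that single graph.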

Parameter sharing: all child models share the weights of the DAG, so no child model has to be trained from scratch to convergence.
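A minimal sketch of parameter sharing keeps a single weight table indexed by DAG edge. Child models own no weights of their own; they look up the entries for the edges they use, so a training step on one child also changes any other child that uses the same edges. The scalar weights, the helper names, and the gradient values are hypothetical simplifications of the weight matrices ENAS actually trains.

```python
import random

NUM_NODES = 4
rng = random.Random(0)

# One shared weight per possible edge (j -> i, j < i) of the full DAG.
# Scalars stand in for the weight matrices used in practice.
shared = {(j, i): rng.uniform(-1, 1) for i in range(NUM_NODES) for j in range(i)}


def child_params(edges):
    """A child model looks up its edges in the shared table instead of owning weights."""
    return {e: shared[e] for e in edges}


def sgd_step(edges, grads, lr=0.1):
    """Training one child updates the shared entries for the edges it uses."""
    for e, g in zip(edges, grads):
        shared[e] -= lr * g


child_a = [(0, 1), (1, 2), (1, 3)]
child_b = [(0, 1), (0, 2), (2, 3)]

before = child_params(child_b)[(0, 1)]
sgd_step(child_a, grads=[1.0, 1.0, 1.0])  # hypothetical gradients from training child A
after = child_params(child_b)[(0, 1)]
print(f"child B's weight on edge (0, 1): {before:.3f} -> {after:.3f}")
```

Child B benefits from child A's update on the shared edge (0, 1) without ever being trained itself, which is where the savings over training each child from scratch come from.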

Results: ENAS searches for architectures in less than 16 hours on a single Nvidia GTX 1080 Ti.
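The three reported search costs can be put on a rough common scale by multiplying GPU count by wall-clock days. This is only a coarse comparison: the GPU models differ, and the ENAS figure is an upper bound derived from "less than 16 hours".

```python
# (GPU count, wall-clock days, GPU model) as reported in the notes above.
searches = {
    "NAS": (800, 28, "K40"),
    "NASNet": (500, 4, "P100"),
    "ENAS": (1, 16 / 24, "GTX 1080 Ti"),  # "less than 16 hours": an upper bound
}

gpu_days = {name: gpus * days for name, (gpus, days, _) in searches.items()}
for name, cost in gpu_days.items():
    print(f"{name:>6}: {cost:,.2f} GPU-days on {searches[name][2]}")
```

This gives 22,400 GPU-days for NAS, 2,000 for NASNet, and under one GPU-day for ENAS.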